Data Center Bridging Capability Exchange Configuration

Overview

The Data Center Bridging Capability Exchange (DCBx) protocol extends the Link Layer Discovery Protocol (LLDP). It facilitates the exchange of Data Center Bridging (DCB) configuration parameters between directly connected devices, such as switches and network interface cards (NICs). DCBx enables automatic negotiation and configuration of DCB features to ensure consistent quality of service (QoS) and traffic prioritization across a network.

DCBx depends on LLDP. If LLDP is disabled on an interface, DCBx cannot operate on that interface, and attempting to enable DCBx there causes the configuration commit to fail.

By automating this exchange, DCBx keeps DCB settings consistent and interoperable between DCB-capable devices in a data center.

Benefits

• Discovers the DCB capabilities of neighboring devices.

• Detects incorrect configurations or mismatches in DCB settings between peers.

• Configures DCB parameters on connected devices for interoperability.

Configuration

Configuring PFC parameter exchange typically involves setting the PFC mode, enabling LLDP, activating DCBx, enabling PFC, and allowing negotiation of flow control for each traffic priority (priorities 0–7).
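
Because PFC is negotiated per priority (0–7), the set of enabled priorities is naturally represented as an 8-bit mask. The following Python sketch illustrates that mapping. The function names are hypothetical, and the bit ordering (bit n corresponds to priority n) is an assumption made for illustration, not a statement about how OcNOS encodes the value internally.

```python
def priorities_to_bitmap(priorities):
    """Return an 8-bit mask with bit n set for each enabled priority n.

    Assumption: bit n corresponds to priority n (illustrative only).
    """
    bitmap = 0
    for p in priorities:
        if not 0 <= p <= 7:
            raise ValueError(f"priority out of range 0-7: {p}")
        bitmap |= 1 << p
    return bitmap


def bitmap_to_priorities(bitmap):
    """Return the sorted list of priorities whose bit is set in the mask."""
    return [p for p in range(8) if bitmap & (1 << p)]


# Priorities 0 1 2 3, as in "priority-flow-control enable priority 0 1 2 3".
print(f"{priorities_to_bitmap([0, 1, 2, 3]):#04x}")  # 0x0f
# Priorities 2 5 7 expressed as a mask and decoded back.
print(bitmap_to_priorities(0xA4))  # [2, 5, 7]
```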

DCBx for PFC via LLDP

The following procedure configures PFC parameters and sends them in LLDP messages.

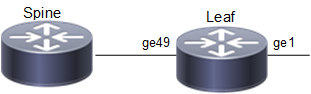

Topology

This topology illustrates a spine-leaf router architecture where PFC parameters are configured and communicated through LLDP messages, enabling the exchange of negotiated priorities among all peers.

DCBx Configuration

Configuring DCBx for PFC via LLDP

The following procedure configures PFC parameters and communicates them in LLDP messages. These configurations can be applied at the global or interface level.

1. Set the IP address for interface xe49 on the leaf. The following is a sample configuration.

!

interface xe49

ip address 1.1.1.1/24

commit

!

2. Enable PFC and set the specific priorities, the advertisement mode, and the accept mode on interface xe49 at the global level. The following is a sample configuration.

!

priority-flow-control mode on

priority-flow-control advertise-local-config

priority-flow-control cap 5

priority-flow-control link-delay-allowance 100

priority-flow-control enable priority 0 1 2 3

lldp-agent

set lldp enable txrx

lldp tlv ieee-8021-org-specific data-center-bridging select

set lldp tx-fast-init 1

dcbx enable

OcNOS(config-if)#commit

!

3. To configure LLDP at the interface level, enter LLDP agent mode and select the ieee-8021-org-specific TLVs to include in the LLDP frames. Set the number of LLDP frames that can be transmitted during a fast transmission period.

!

lldp-agent

set lldp enable txrx

lldp tlv ieee-8021-org-specific data-center-bridging select

set lldp tx-fast-init 1

dcbx enable

exit

commit

end

!

For global level configuration, execute the following:

lldp run

lldp tlv-select basic-mgmt system-name

lldp tlv-select ieee-8021-org-specific data-center-bridging

set lldp timer msg-tx-interval 5

lldp notification-interval 5

Note: To minimize the impact of PFC (Priority Flow Control) updates when operating in Auto mode via DCBx, this custom implementation ensures that PFC priority configurations remain consistent even during peer node reboots or interface flaps.

The mechanism locally caches the received DCBx PFC parameters and prevents unnecessary hardware resets of these values in the following scenarios:

• Peer node reboot or power cycle.

• Peer node software upgrade or downgrade.

• Interface flap or fiber cut.

When the LLDP session is re-established after such events, the node compares the newly received PFC parameters with the locally cached values:

• If no change is detected, the PFC configuration remains untouched, avoiding reapplication.

• If a difference is detected, the new parameters are applied to ensure proper PFC behavior.
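
The cache-and-compare behavior described above can be sketched in Python as follows. This is a minimal illustration of the logic only, not the OcNOS implementation. The names PfcParams, PfcCache, on_lldp_session_up, and hw_writes are hypothetical, and the choice of parameters held in the cache (willing, cap, priorities) is an assumption based on the columns shown by the show commands.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PfcParams:
    """PFC parameters received from the peer (field set is an assumption)."""
    willing: bool
    cap: int
    priorities: frozenset


class PfcCache:
    """Hypothetical per-interface cache of the last applied peer parameters."""

    def __init__(self):
        self._cached = {}    # interface name -> PfcParams
        self.hw_writes = []  # stand-in for hardware programming calls

    def on_lldp_session_up(self, interface, received):
        """Apply received parameters only if they differ from the cache.

        The cache is deliberately not cleared when the session goes down,
        so an unchanged peer causes no hardware update after a flap.
        Returns True if the hardware was reprogrammed.
        """
        if self._cached.get(interface) == received:
            return False  # no change: leave the PFC configuration untouched
        self._cached[interface] = received
        self.hw_writes.append((interface, received))
        return True


cache = PfcCache()
params = PfcParams(willing=True, cap=8, priorities=frozenset({2, 5, 7}))
print(cache.on_lldp_session_up("xe49", params))  # True: first time, applied
print(cache.on_lldp_session_up("xe49", params))  # False: same after a flap
changed = PfcParams(willing=True, cap=8, priorities=frozenset({2, 5}))
print(cache.on_lldp_session_up("xe49", changed))  # True: difference applied
```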

This feature is enabled by default from release 6.6.1 and applies exclusively to nodes operating in PFC Auto mode, requiring no additional configuration.

Key Benefit:

• Minimized Traffic Disruption:

Maintains stable traffic flow with reduced packet loss and network instability during peer node restarts or interface disruptions.

Validation

Verify the DCBx advertisement via LLDP.

Execute the following show command to display the detailed information about DCBx.

OcNOS#show data-center-bridging remote-details interface xe49

PFC Remote details

interface : xe49

State     Willing   Cap   Priorities

================================================================================

On        On        8     2 5 7

Execute the following show command to display the administrative details.

OcNOS#show data-center-bridging admin-details interface xe49

PFC administrative details

interface : xe49

State     advertise   willing   cap   syncd   priorities

================================================================================

On        On          On        8     On      2 5 7

Execute the following show command to display the operational details.

OcNOS#show data-center-bridging operational-details interface xe49

PFC Operational details

interface : xe49

state     cap   syncd   priorities

================================================================================

On        8     On      2 5 7
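
When validating many interfaces, the show output can be checked by a script. The following Python sketch parses one PFC table of the form shown above. It assumes the layout of these samples: a header row, a separator row of "=" characters, and one value row in which the last column holds the space-separated priority list. The function name parse_pfc_table and the SAMPLE text are illustrative, and other releases may format the output differently.

```python
SAMPLE = """\
PFC Operational details
interface : xe49
state cap syncd priorities
================================================================================
On 8 On 2 5 7
"""


def parse_pfc_table(output):
    """Parse a single-row PFC table into a dict keyed by lowercase header.

    Assumes the last header column is the priority list, which may contain
    zero or more space-separated integers.
    """
    lines = [ln.strip() for ln in output.splitlines() if ln.strip()]
    # Locate the separator row; the header is above it, the values below.
    sep = next(i for i, ln in enumerate(lines) if set(ln) == {"="})
    headers = [h.lower() for h in lines[sep - 1].split()]
    values = lines[sep + 1].split()
    fixed = len(headers) - 1  # every column except the priority list
    row = dict(zip(headers[:fixed], values[:fixed]))
    row[headers[-1]] = [int(v) for v in values[fixed:]]
    return row


row = parse_pfc_table(SAMPLE)
print(row["state"], row["cap"], row["syncd"], row["priorities"])
```

The same function can be applied to the remote and administrative tables, since only the header names and the number of fixed columns differ.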